Multimodal Sentiment Sensing and Emotion Recognition Based on Cognitive Computing Using Hidden Markov Model with Extreme Learning Machine

DOI:

https://doi.org/10.17762/ijcnis.v14i2.5496

Keywords:

multimodal content, emotion recognition, social media review, HMM_ExLM, decision level fusion

Abstract

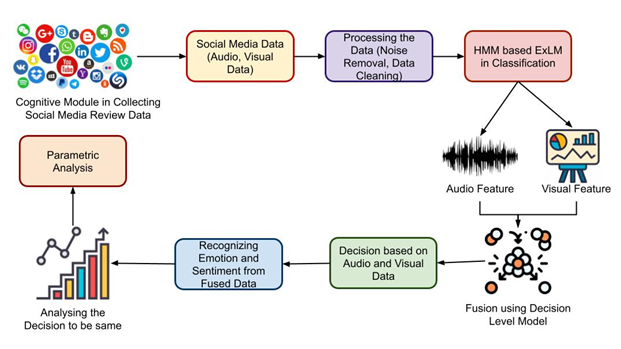

In today's competitive business environment, the exponential growth of multimodal content produces a massive amount of unstructured data. Unstructured big data has no specific format or organisation and can take any form, including text, audio, photos, and video. According to the literature, many assumptions and algorithms are generally required to recognize different emotions, and most emotion recognition work focuses on a single modality, such as voice, facial expression, or bio-signals. This paper proposes a novel technique for multimodal sentiment sensing with emotion recognition using artificial intelligence. Audio and visual data are collected from social media reviews and classified using a hidden Markov model based extreme learning machine (HMM_ExLM), which is also used to train the features. Simultaneously, the emotional features of speech are maximised. For expression images, the face is split into regions, and each region is assigned a different weight when extracting information. Speech and facial expression data are then merged using decision-level fusion, and the speech properties associated with each expression in the face regions are used for classification. Experimental results show that combining speech and expression features improves performance substantially compared with using either speech or expression alone. A parametric comparison was made in terms of accuracy, recall, precision, and optimization level.
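To make the decision-level fusion step concrete, here is a minimal Python sketch of one common variant: a weighted sum of the class probabilities produced by a speech classifier and a facial expression classifier. The abstract does not specify the fusion rule, the label set, or the weights, so `EMOTIONS`, `fuse_decisions`, `predict_emotion`, and `speech_weight` are all hypothetical names and values chosen for illustration, not the authors' implementation.

```python
# Hypothetical label set; the abstract does not list the emotion classes.
EMOTIONS = ["angry", "happy", "neutral", "sad"]


def fuse_decisions(speech_probs, face_probs, speech_weight=0.5):
    """Weighted-sum decision-level fusion of two per-class probability lists.

    speech_weight is an assumed tuning parameter in [0, 1]; the face
    classifier receives the remaining weight (1 - speech_weight).
    """
    if len(speech_probs) != len(face_probs):
        raise ValueError("both modalities must score the same classes")
    if not 0.0 <= speech_weight <= 1.0:
        raise ValueError("speech_weight must lie in [0, 1]")

    fused = [
        speech_weight * s + (1.0 - speech_weight) * f
        for s, f in zip(speech_probs, face_probs)
    ]
    total = sum(fused)
    if total <= 0.0:
        raise ValueError("fused scores must have a positive sum")
    # Renormalise so the fused scores form a probability distribution.
    return [p / total for p in fused]


def predict_emotion(speech_probs, face_probs, speech_weight=0.5):
    """Return the label with the highest fused score."""
    fused = fuse_decisions(speech_probs, face_probs, speech_weight)
    if len(fused) != len(EMOTIONS):
        raise ValueError("expected one score per emotion label")
    best = max(range(len(fused)), key=fused.__getitem__)
    return EMOTIONS[best]


if __name__ == "__main__":
    # Illustrative scores only; these are not results from the paper.
    speech = [0.4, 0.3, 0.2, 0.1]
    face = [0.1, 0.6, 0.2, 0.1]
    print(predict_emotion(speech, face))
```

Because fusion happens on classifier outputs rather than on raw features, each modality can keep its own feature extraction and model; only the per-class scores need to share a common label order.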


License

Copyright (c) 2022 Author

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.