Optimization of Deep Convolutional Neural Network with the Integrated Batch Normalization and Global Pooling

DOI: https://doi.org/10.17762/ijcnis.v15i1.5617

Keywords: Neural network, weight optimisation, pooling, convolution, deep learning, normalisation.

Abstract

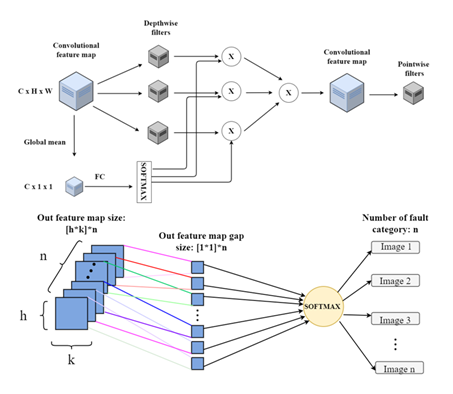

Deep convolutional neural networks (DCNNs) have made significant progress in recent years across a wide range of applications, including image identification, audio recognition, and machine translation. These tasks support machine intelligence in a variety of ways. However, DCNNs have a large number of parameters. This makes their floating-point operations and their conversion for machine terminals difficult. To address this issue, the convolution in the DCNN is optimized. The network's characteristics are adjusted so that information loss is minimized while performance improves, and the optimization problem is addressed by minimizing the convolution function. First, batch normalization is applied. Next, a global pooling approach reduces each full feature map to a single value, instead of reducing only neighborhood values. Finally, traditional convolution is split into depthwise and pointwise convolutions to reduce the model size and the number of computations. The performance of the optimized convolution-based DCNN is evaluated in terms of accuracy and error rate. It is compared with existing state-of-the-art techniques and outperforms them.
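The three building blocks named in the abstract can be illustrated with a minimal pure-Python sketch. This is not the authors' implementation. The abstract does not give layer sizes, the pooling type, or where batch normalization is placed, so the following are assumptions:

- Batch normalization uses mini-batch (training-mode) statistics for a single channel.
- Global pooling is global *average* pooling. Global max pooling is an equally valid reading.
- The convolution cost comparison assumes stride 1, "same" padding, and no bias.

The function names and the example layer (3x3 kernel, 64 to 128 channels, 32x32 feature map) are hypothetical values chosen for illustration.

```python
import math
from typing import List, Tuple


def batch_norm(values: List[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-5) -> List[float]:
    """Normalize one channel's activations across a mini-batch, then scale and shift.

    Assumption: training-mode statistics (batch mean and biased variance);
    at inference time, running averages would normally be used instead.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def global_average_pool(feature_map: List[List[float]]) -> float:
    """Collapse an entire H x W feature map to a single value (its mean)."""
    total = sum(sum(row) for row in feature_map)
    count = sum(len(row) for row in feature_map)
    return total / count


def standard_conv_cost(k: int, c_in: int, c_out: int, h: int, w: int) -> Tuple[int, int]:
    """Weights and multiply-adds of a standard k x k convolution producing an h x w output."""
    params = k * k * c_in * c_out          # one k x k x c_in filter per output channel
    return params, params * h * w


def separable_conv_cost(k: int, c_in: int, c_out: int, h: int, w: int) -> Tuple[int, int]:
    """Weights and multiply-adds of a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in               # one k x k filter per input channel
    pointwise = c_in * c_out               # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    return params, params * h * w


if __name__ == "__main__":
    print(batch_norm([1.0, 2.0, 3.0, 4.0]))                 # roughly zero mean, unit variance
    print(global_average_pool([[1.0, 2.0], [3.0, 6.0]]))    # 3.0
    std = standard_conv_cost(3, 64, 128, 32, 32)            # (73728, 75497472)
    sep = separable_conv_cost(3, 64, 128, 32, 32)           # (8768, 8978432)
    print(std, sep, sep[0] / std[0])
```

For this hypothetical layer, the separable version uses about 12% of the weights and multiply-adds of the standard convolution. In general, the ratio is 1/C_out + 1/k², where C_out is the number of output channels and k is the kernel size. This is why splitting convolution into depthwise and pointwise steps shrinks both model size and computation.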

License

Copyright (c) 2023 International Journal of Communication Networks and Information Security (IJCNIS)

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.